So what is the HorizontalPodAutoscaler (HPA)?

The HPA is a Kubernetes component that automatically scales the number of pods running your application based on CPU/Memory utilization, as well as other metrics. It is a very important feature that can help you save money and resources by scaling your application up and down with the load, instead of running it at a fixed size all the time.

The HPA is available in two versions: autoscaling/v1 and autoscaling/v2.

In this article, we'll cover only the second version of HPA. The first version is still available, but it only supports scaling on CPU utilization, which makes it too limited for most use cases. The second version is also the most widely used, so it's the one we'll focus on.

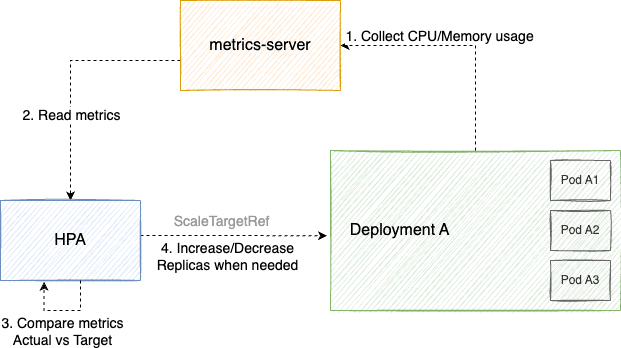

Here's how the HPA interacts with other components:

- The metrics-server collects metrics from all pods via kubelet, and makes them available via the Kubernetes Metrics API.

- The HPA uses the Metrics API to get current metrics for the pods it's managing. This happens in a loop, every 15 seconds by default.

- The HPA compares the current metrics with the target metrics, and decides if it needs to scale the application up or down.

- If the HPA decides to scale the application, it updates the spec.replicas field of the target deployment, and the Deployment controller then adds or removes pods to match the new count.
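The decision in the third step follows a ratio documented by Kubernetes: desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). Below is a minimal Python sketch of that calculation for a single metric, assuming the default 10% tolerance and ignoring things the real controller also handles (missing metrics, pod readiness, stabilization windows). The function name and parameters are illustrative only, not part of any Kubernetes API.

```python
import math


def desired_replicas(current_replicas: int,
                     current_value: float,
                     target_value: float,
                     min_replicas: int,
                     max_replicas: int,
                     tolerance: float = 0.1) -> int:
    """Illustrative sketch of the HPA replica calculation for one metric."""
    ratio = current_value / target_value
    # If the ratio is close enough to 1.0, the replica count is left alone.
    if abs(ratio - 1.0) <= tolerance:
        proposed = current_replicas
    else:
        proposed = math.ceil(current_replicas * ratio)
    # The result is always clamped to the configured bounds.
    return max(min_replicas, min(max_replicas, proposed))


# 4 pods averaging 75% CPU against a 50% target: ceil(4 * 1.5) = 6
print(desired_replicas(4, 75, 50, min_replicas=2, max_replicas=10))
```

The tolerance band is what keeps the HPA from flapping between replica counts when usage hovers right around the target.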

Requirements before using HPA

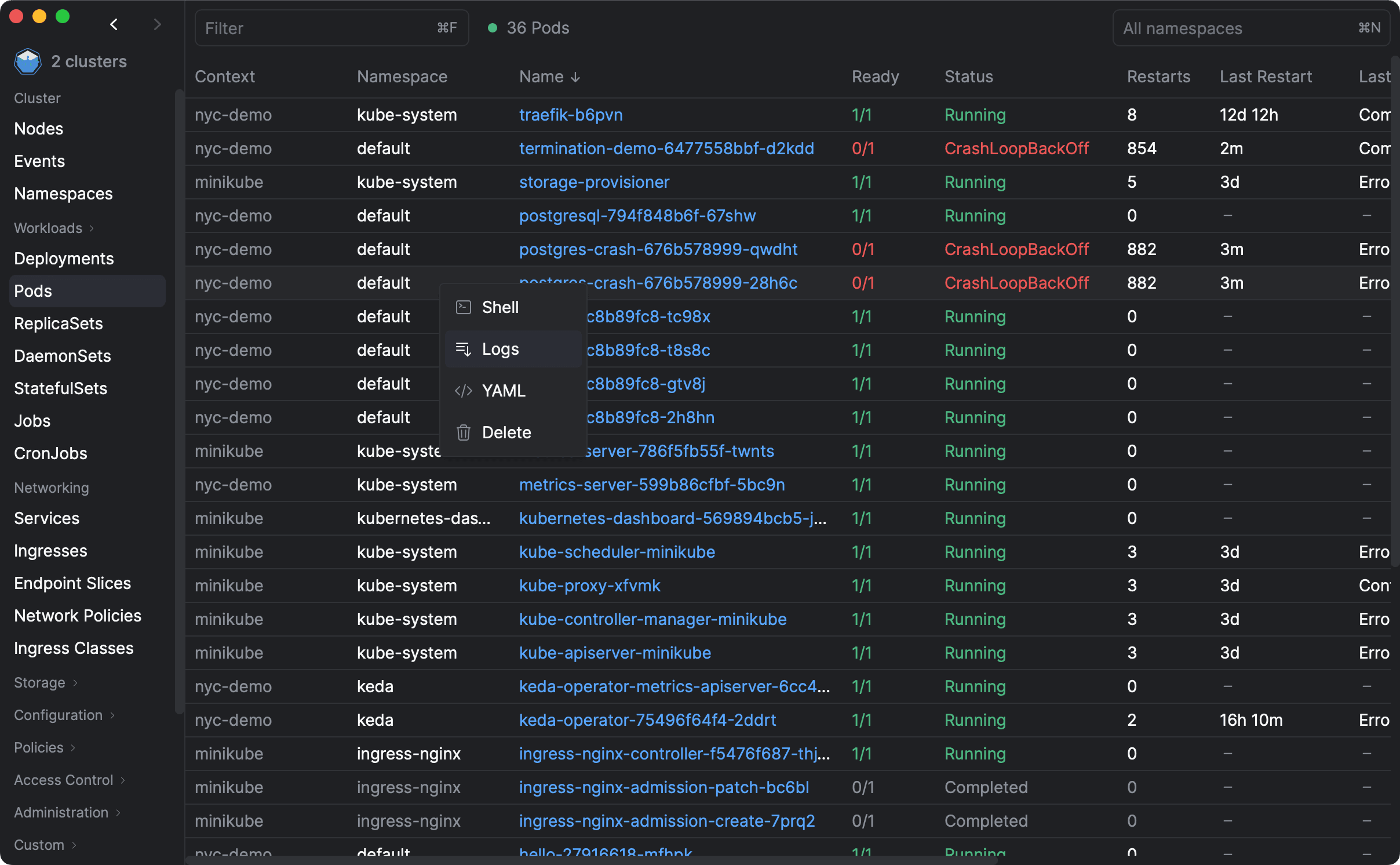

Metrics Server

If you look at the diagram above, you'll notice that the HPA has a hard dependency on the Kubernetes Metrics API. This means that you need to have a metrics server installed in your cluster before you can use the HPA.

If you're using a managed Kubernetes service like GKE, EKS, AKS, etc., then in most cases you don't need to worry about this, as the metrics server is already installed for you. But if it's not, then you'll need to install it yourself.

Tip: Want to know if there's a metrics server installed in your cluster? Run the following command:

```
kubectl top pods -A
```

If you get a list of pods with their CPU and memory usage, then you have a metrics server installed. If the command returns an error instead, you don't, and you'll need to install one yourself.

It's outside the scope of this article to explain how to install a metrics server, but the two most popular options are metrics-server and prometheus-adapter. Use prometheus-adapter if Prometheus is already part of your stack; otherwise, use metrics-server.

Cluster Autoscaler (CA)

The HPA is a great tool for scaling your applications, but it's not enough on its own. The HPA can create new pods, but if the cluster doesn't have enough free resources to schedule them, those pods stay Pending and your application can't actually scale out. In that situation, the HPA can't be used to its full potential.

This is precisely where the Cluster Autoscaler (CA) comes in, and it's a fantastic combination with the HPA. The CA is a Kubernetes component that automatically provisions new nodes in your cluster when pods are stuck pending because there is nowhere to schedule them. When the load drops, the HPA scales the deployment back in, and the CA then removes the nodes that have become underutilized.

Once again, if you're using a managed Kubernetes service, then the CA is most likely available to you already, although you may need to enable it. You will need to configure it with things like the minimum and maximum number of nodes in your cluster, but that's all. Easy peasy!

Creating your first HPA

To create a HorizontalPodAutoscaler, you only need:

- scaleTargetRef: the workload that will be managed by the HPA, such as a Deployment, StatefulSet, or ReplicaSet.

- Min/Max Number of Replicas: the minimum and maximum number of replicas the HPA can scale between.

- Metrics: a list of metrics that will be used to scale the application.

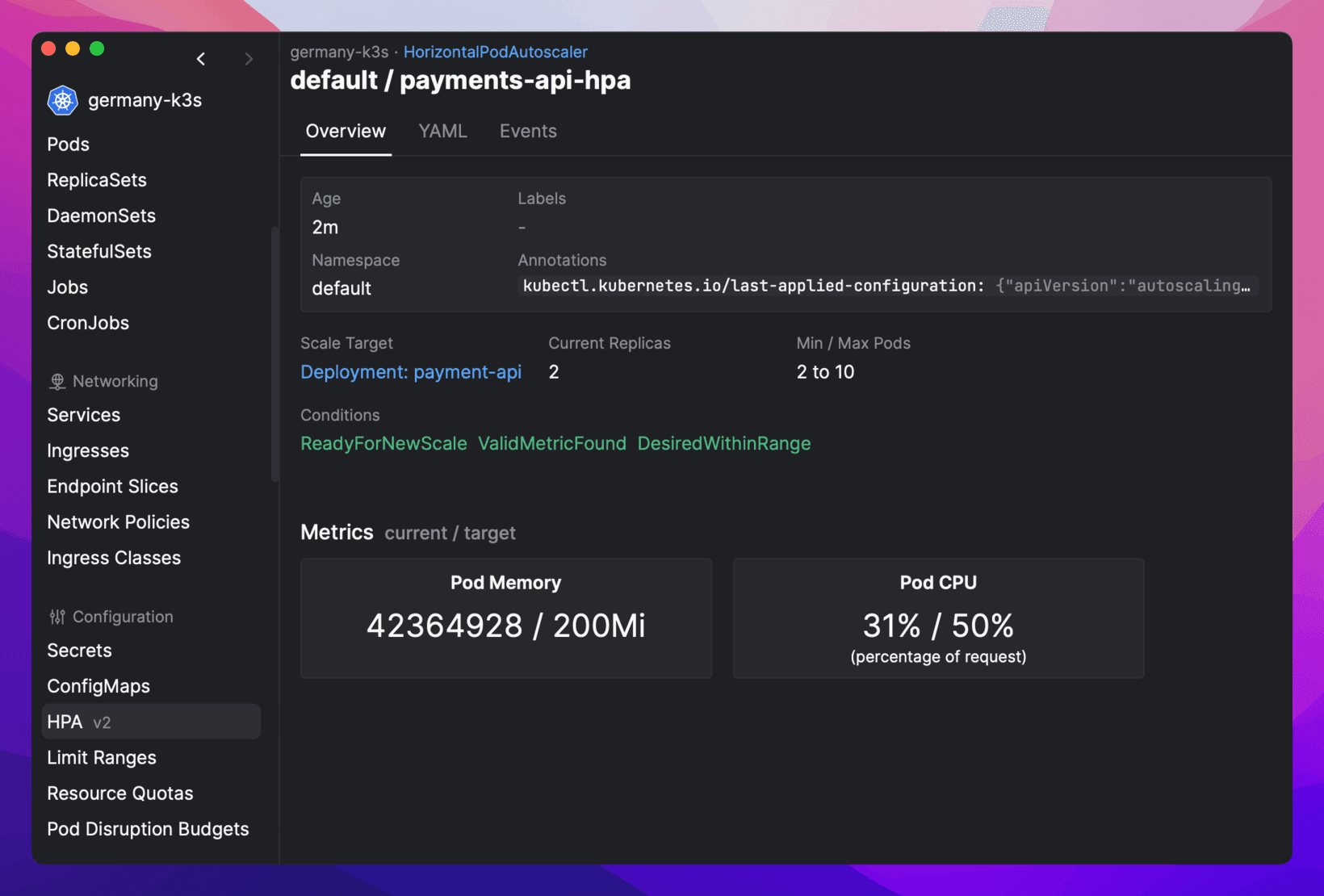

Here's an example of a HorizontalPodAutoscaler that scales the payments-api deployment based on CPU and Memory usage:

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: payments-api-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: payments-api
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 50
    - type: Resource
      resource:
        name: memory
        target:
          type: AverageValue
          averageValue: 200Mi
```

When the average CPU utilization of the pods in the payments-api deployment rises above 50%, or their average memory usage rises above 200Mi, the HPA will scale out the deployment, up to a maximum of 10 replicas. When both CPU and Memory usage drop below their targets, the HPA will scale the deployment back in, but never below the minimum of 2 replicas.
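When more than one metric is configured, Kubernetes computes a proposed replica count for each metric and uses the highest one, which is why either CPU or Memory alone can trigger a scale-out. The sketch below applies that rule to the payments-api HPA above. The current usage figures are made-up inputs for illustration, the helper names are hypothetical, and stabilization windows are ignored.

```python
import math


def proposal(current_replicas: int, current: float, target: float,
             tolerance: float = 0.1) -> int:
    """Replica count a single metric asks for (ratio rule with tolerance)."""
    ratio = current / target
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


def combine(current_replicas: int, metrics: dict[str, tuple[float, float]],
            min_replicas: int, max_replicas: int) -> int:
    """Take the largest per-metric proposal, then clamp to min/max."""
    proposals = [proposal(current_replicas, cur, tgt)
                 for cur, tgt in metrics.values()]
    return max(min_replicas, min(max_replicas, max(proposals)))


# Hypothetical readings for 3 payments-api pods:
# CPU averages 40% (target 50%), memory averages 300Mi (target 200Mi).
readings = {
    "cpu": (40, 50),        # percent utilization
    "memory": (300, 200),   # MiB per pod
}
print(combine(3, readings, min_replicas=2, max_replicas=10))
```

Here CPU alone would keep 3 replicas, but memory asks for ceil(3 * 1.5) = 5, so the deployment scales out to 5.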

When using HPA, it's common to hit a few different errors that may seem confusing at first. We've also written a separate article that covers the most common HPA errors and how to fix them. Make sure to check it out if you're having trouble with your HPA.

Scaling based on other metrics

CPU and Memory are the most common metrics used to scale applications, but they're not the only ones. You can also scale your applications based on custom and/or external metrics.

Custom metrics are metrics that come from applications running in the Kubernetes cluster. The most common example is the number of requests per second (RPS). This lets you scale your application based on the number of requests it's receiving, instead of CPU/Memory usage. So if you know that each pod can handle 1000 requests per second, you can configure the HPA to scale out when the average exceeds 1000 requests per second per pod. Keep in mind that custom metrics are served through the Custom Metrics API, so you'll need an adapter such as prometheus-adapter; metrics-server only provides CPU and memory.
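With a per-pod target like this, the HPA's ratio rule boils down to total traffic divided by the per-pod target. A quick sketch of that arithmetic, assuming the 1000 RPS-per-pod figure from the paragraph above, the min/max bounds from the earlier payments-api example, and a hypothetical total load; tolerance and stabilization are left out for simplicity.

```python
import math


def replicas_for_rps(total_rps: float, rps_per_pod: float,
                     min_replicas: int, max_replicas: int) -> int:
    """Pods needed so each one averages at most rps_per_pod (illustrative)."""
    needed = math.ceil(total_rps / rps_per_pod)
    return max(min_replicas, min(max_replicas, needed))


# Hypothetical: 4,500 requests/second spread across the deployment.
print(replicas_for_rps(4500, 1000, min_replicas=2, max_replicas=10))  # 5
```

The same reasoning applies to external metrics such as queue length: divide the backlog by what a single pod can work through.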

External metrics are metrics that represent the state of a service that is running outside of the Kubernetes cluster, such as a database or a message queue in AWS, GCP, Azure, etc. This is useful when you want to scale your application based on the number of messages in a queue, for example.

KEDA is a very popular tool that allows you to scale your applications based on dozens of different external metrics. We highly recommend checking it out if you're using queues (like AWS SQS or Azure Service Bus), Kafka, or other cloud services.