Hi there! 👋

If you found this page on Google, chances are that you are trying to fix the `couldn't get resource list for` error message in Kubectl. If that's the case, you are in the right place!

This post may not exactly solve your problem, but it will hopefully give you some pointers on how to troubleshoot and fix it.

```
couldn't get resource list for XYZ: the server is currently unable to handle the request
```

XYZ could be anything, but it's usually `external.metrics.k8s.io/v1beta1` or `metrics.k8s.io/v1beta1`. Regardless of the exact error message, the root cause is the same.
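The group/version in the message maps directly onto the name of an APIService object (the objects discussed further down), which is always `<version>.<group>`: `metrics.k8s.io/v1beta1` becomes `v1beta1.metrics.k8s.io`. Here is a minimal sketch, with hypothetical helper names, that pulls every such name out of Kubectl's error output so you know which APIServices to inspect:

```python
import re

# Captures the "group/version" part of kubectl's discovery error, e.g.
# "couldn't get resource list for metrics.k8s.io/v1beta1: the server ..."
ERROR_RE = re.compile(r"couldn't get resource list for (?P<gv>[^:\s]+):")


def apiservice_name(group_version: str) -> str:
    """Turn 'group/version' into the APIService name '<version>.<group>'.

    The core group has no name, so 'v1' maps to 'v1.' (as kubectl prints it).
    """
    if "/" in group_version:
        group, version = group_version.split("/", 1)
    else:
        group, version = "", group_version
    return f"{version}.{group}"


def broken_apiservices(stderr: str) -> list[str]:
    """Return the APIService names mentioned in the error output, deduplicated, in order."""
    names: list[str] = []
    for match in ERROR_RE.finditer(stderr):
        name = apiservice_name(match.group("gv"))
        if name not in names:
            names.append(name)
    return names


if __name__ == "__main__":
    sample = (
        "couldn't get resource list for external.metrics.k8s.io/v1beta1: "
        "the server is currently unable to handle the request\n"
    )
    print(broken_apiservices(sample))  # ['v1beta1.external.metrics.k8s.io']
```

Kubectl usually repeats the same error several times in one run, which is why the sketch deduplicates the names.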

Where does this error come from?

The Kubernetes API can be extended with Custom Resources that are fully managed by Kubernetes and available to Kubectl and other tools. When you run `kubectl get XYZ`, Kubectl first asks the API server for the list of resources it serves in every API group and version (this is called API discovery). If fetching that list fails for any group, Kubectl prints this error, which is why it can show up even for commands that have nothing to do with the broken group. If XYZ is registered as a CRD (CustomResourceDefinition), the API server stores it in etcd and serves it like any built-in resource, such as Pod or Deployment. This is the most popular way to extend the Kubernetes API because developers don't need to implement any additional logic to manage the resource. You basically get a free CRUD API for your resource.
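Before Kubectl can work with XYZ, it needs the resource list for XYZ's group and version, and the API server serves that list at fixed paths: the legacy core group under `/api/v1`, and every named group (built-in, CRD-backed or aggregated) under `/apis/<group>/<version>`. This tiny sketch (the `discovery_path` helper is hypothetical) maps a group/version to the path that gets requested:

```python
def discovery_path(group_version: str) -> str:
    """Map 'group/version' (or just 'v1' for the core group) to the API path
    that lists that group version's resources."""
    if "/" not in group_version:
        # The legacy core group has no name and lives under /api.
        return f"/api/{group_version}"
    group, version = group_version.split("/", 1)
    # Every named group (built-in, CRD-backed or aggregated) lives under /apis.
    return f"/apis/{group}/{version}"


if __name__ == "__main__":
    for gv in ["v1", "apps/v1", "metrics.k8s.io/v1beta1"]:
        print(f"{gv:24} -> {discovery_path(gv)}")
```

You can fetch any of these paths yourself with `kubectl get --raw`. For example, `kubectl get --raw /apis/metrics.k8s.io/v1beta1` should return that group's resource list when it's healthy, and fail with a ServiceUnavailable error when the API server behind it is down.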

Alternatively, developers can extend the Kubernetes API with resources served by a third-party API server running in the same Kubernetes cluster (this mechanism is called the aggregation layer). If XYZ is managed this way, the API server forwards the request to the third-party API server and returns its response to Kubectl. This is the most flexible way to extend the Kubernetes API because developers can implement any logic they want in the third-party API server. However, it also means that the third-party API server needs to be up and running for Kubectl to work.
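To make the difference between the two approaches concrete, here is a toy model of how a request gets dispatched. It is not the real API server code; the `APIService` dataclass and `route` function are made up for illustration. Built-in and CRD-backed groups are answered locally, groups registered by a third-party API server are proxied, and a proxied group whose backend is down fails with a 503:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class APIService:
    """Toy stand-in for an APIService registration."""
    group: str
    version: str
    service: Optional[str] = None  # "namespace/name"; None means served locally


def route(path: str, registry: list[APIService], healthy: set[str]) -> tuple[int, str]:
    """Return (HTTP status, explanation) for a request path.

    Local groups (built-in types and CRDs) are answered by the main API
    server from etcd. Aggregated groups are proxied to their Service, and a
    backend that isn't healthy turns into a 503 for the caller.
    """
    parts = path.strip("/").split("/")
    if parts[0] == "api":
        return 200, "core group, served locally"
    if len(parts) < 3 or parts[0] != "apis":
        return 404, "not found"
    group, version = parts[1], parts[2]
    for reg in registry:
        if (reg.group, reg.version) != (group, version):
            continue
        if reg.service is None:
            return 200, "served locally"
        if reg.service in healthy:
            return 200, f"proxied to {reg.service}"
        # kubectl reports a 503 as "the server is currently unable to handle the request"
        return 503, f"{reg.service} is unavailable"
    return 404, "not found"


if __name__ == "__main__":
    registry = [
        APIService("apps", "v1"),
        APIService("metrics.k8s.io", "v1beta1", "kube-system/metrics-server"),
        APIService("foo.com", "v1beta1", "foo/foo-apiserver"),
    ]
    healthy = {"kube-system/metrics-server"}
    for path in ["/apis/apps/v1/deployments",
                 "/apis/metrics.k8s.io/v1beta1/nodes",
                 "/apis/foo.com/v1beta1"]:
        print(path, route(path, registry, healthy))
```

The point of the model is the last branch: the main API server itself is fine, it just has nobody to forward the request to.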

And that is where the issue usually comes from.

Kubernetes metrics (be it metrics-server or prometheus-adapter) are a very common cause of this error because metrics are not stored in etcd like other resources. Instead, they are kept in memory or in Prometheus, and then exposed via an API extension. If that API is not available, you will get this error.

You can extend the API server with external resources by creating a resource like this:

```yaml
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  name: v1beta1.foo.com
spec:
  group: foo.com
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: foo-apiserver
    namespace: foo
    port: 443
  version: v1beta1
  versionPriority: 100
```

The manifest above tells the Kubernetes API server to forward all requests for the `foo.com/v1beta1` group version (registered as the `v1beta1.foo.com` APIService) to the `foo-apiserver` service in the `foo` namespace. If foo-apiserver is unavailable, you will get the `couldn't get resource list for foo.com/v1beta1: the server is currently unable to handle the request` error message.
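If you'd rather generate these objects than hand-write YAML, the same manifest can be built as JSON, which `kubectl apply -f -` also accepts. This is a sketch with a hypothetical `apiservice_manifest` helper; the priority values are simply copied from the example above, and the object name has to be `<version>.<group>`:

```python
import json


def apiservice_manifest(group: str, version: str, service: str, namespace: str,
                        port: int = 443, insecure_skip_tls: bool = True) -> dict:
    """Build an APIService that sends <group>/<version> to a Service.

    The API server's validation requires metadata.name to be "<version>.<group>".
    """
    return {
        "apiVersion": "apiregistration.k8s.io/v1",
        "kind": "APIService",
        "metadata": {"name": f"{version}.{group}"},
        "spec": {
            "group": group,
            "version": version,
            # Priorities copied from the YAML example above.
            "groupPriorityMinimum": 100,
            "versionPriority": 100,
            # Matches the example; production setups usually set spec.caBundle instead.
            "insecureSkipTLSVerify": insecure_skip_tls,
            "service": {"name": service, "namespace": namespace, "port": port},
        },
    }


if __name__ == "__main__":
    # Pipe the output into: kubectl apply -f -
    print(json.dumps(apiservice_manifest("foo.com", "v1beta1", "foo-apiserver", "foo"), indent=2))
```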

So what's wrong with my service?

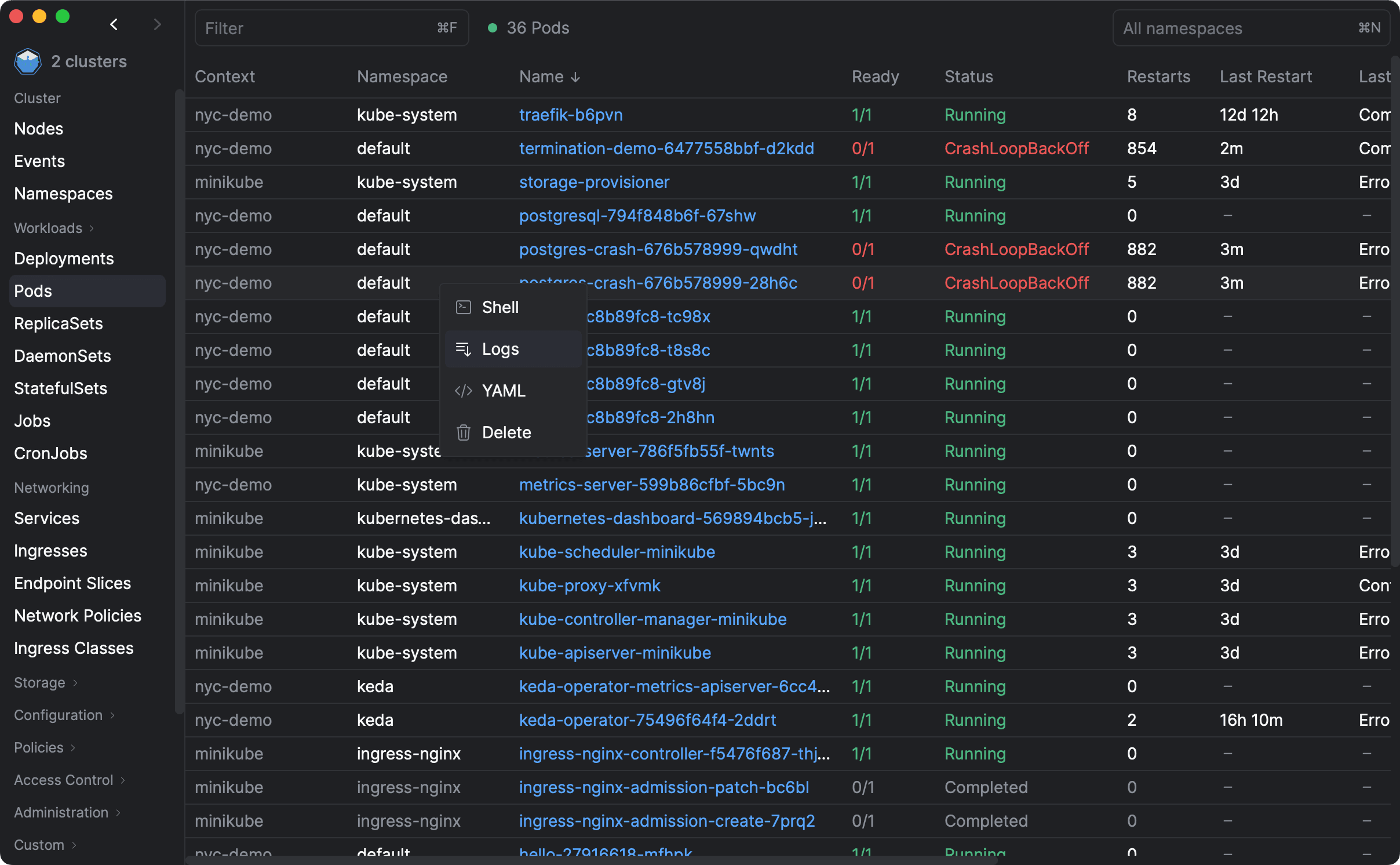

The first thing you should do is run `kubectl get apiservice`, which lists every Local and external APIService registered in your cluster. What we're looking for in this table is any APIService whose AVAILABLE column is False.

For instance, this is what I have (with a lot of irrelevant APIServices removed):

```
NAME                              SERVICE                                AVAILABLE                  AGE
v1.                               Local                                  True                       62d
v1.admissionregistration.k8s.io   Local                                  True                       62d
v1.apiextensions.k8s.io           Local                                  True                       62d
v1.apps                           Local                                  True                       62d
v1.authentication.k8s.io          Local                                  True                       62d
v2beta2.autoscaling               Local                                  True                       62d
v1.authorization.k8s.io           Local                                  True                       62d
v1.autoscaling                    Local                                  True                       62d
v2.autoscaling                    Local                                  True                       62d
v1.batch                          Local                                  True                       62d
...
v1beta1.metrics.k8s.io            kube-system/metrics-server             True                       62d
v1alpha1.foo.com                  Local                                  True                       6d5h
v1beta1.external.metrics.k8s.io   keda/keda-operator-metrics-apiserver   False (MissingEndpoints)   62d
v1beta1.foo.com                   foo/foo-apiserver                      False (ServiceNotFound)    7m16s
```

The AVAILABLE column already hints at what's wrong with the service, but you can get more details with `kubectl get apiservice v1beta1.external.metrics.k8s.io -o yaml`:

```yaml
status:
  conditions:
  - lastTransitionTime: "2023-04-24T10:34:12Z"
    message:
      endpoints for service/keda-operator-metrics-apiserver in "keda" have
      no addresses with port name "https"
    reason: MissingEndpoints
    status: "False"
    type: Available
```
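Reading YAML one APIService at a time gets tedious when several of them are broken. Below is a minimal sketch (the helper names are hypothetical) that goes through the JSON from `kubectl get apiservice -o json` and reports the name, backing service, `reason` and `message` of every APIService whose Available condition isn't True:

```python
import json
import subprocess
from typing import Optional


def available_condition(apiservice: dict) -> Optional[dict]:
    """Return the 'Available' condition from an APIService's status, if any."""
    for cond in apiservice.get("status", {}).get("conditions", []):
        if cond.get("type") == "Available":
            return cond
    return None


def unavailable(apiservice_list: dict) -> list[dict]:
    """Collect name, service, reason and message for every APIService
    whose Available condition is not "True"."""
    report = []
    for item in apiservice_list.get("items", []):
        cond = available_condition(item) or {}
        if cond.get("status") == "True":
            continue
        svc = item.get("spec", {}).get("service")
        report.append({
            "name": item["metadata"]["name"],
            # Same convention as kubectl's SERVICE column: "Local" when there is no service.
            "service": f"{svc['namespace']}/{svc['name']}" if svc else "Local",
            "reason": cond.get("reason", "Unknown"),
            # Collapse line breaks and repeated spaces into single spaces.
            "message": " ".join(cond.get("message", "").split()),
        })
    return report


def load_from_cluster() -> dict:
    """Fetch all APIServices as JSON (needs kubectl and a configured context)."""
    out = subprocess.run(["kubectl", "get", "apiservice", "-o", "json"],
                         capture_output=True, text=True, check=True).stdout
    return json.loads(out)


if __name__ == "__main__":
    # Offline sample modelled on the KEDA condition shown above;
    # swap in load_from_cluster() to inspect a real cluster.
    sample = {"items": [
        {"metadata": {"name": "v1beta1.external.metrics.k8s.io"},
         "spec": {"service": {"namespace": "keda",
                              "name": "keda-operator-metrics-apiserver"}},
         "status": {"conditions": [{
             "type": "Available", "status": "False", "reason": "MissingEndpoints",
             "message": 'endpoints for service/keda-operator-metrics-apiserver in "keda" '
                        'have no addresses with port name "https"'}]}},
        {"metadata": {"name": "v1.apps"}, "spec": {"service": None},
         "status": {"conditions": [{"type": "Available", "status": "True"}]}},
    ]}
    for row in unavailable(sample):
        print(f"{row['name']}  {row['service']}  {row['reason']}: {row['message']}")
```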

The `status` property is what you really want to look at. Here are the possible values of the `reason` property when the APIService is unavailable:

- ServiceNotFound: The service is not found in the cluster. You may have Pods for the API, but the service is missing or misconfigured. Double-check that the name and namespace of the service are correct.

- ServiceAccessError: The service is found, but the API server can't access it for some reason, which is explained in the `message` property.

- ServicePortError: The service is not listening on the port specified in the APIService manifest.

- EndpointsNotFound: The API server can't find any endpoints for the service. Check if the deployment is running, if the selectors are correct and if the service is pointing to the right deployment.

- EndpointsAccessError: The API server can't access the endpoints for some reason, which is explained in the `message` property.

- MissingEndpoints: The service is found and has endpoints, but none of its ready addresses expose the port the APIService points to. Besides a wrong port name, this also happens when the backing Pods aren't running or ready yet.

- FailedDiscoveryCheck: The service and endpoints look fine, but the API server's discovery request to the backend failed or returned an error status. The `message` property has the details.
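Putting these reasons together with the MissingEndpoints message shown earlier: as far as I can tell, the API server resolves the APIService's `port` to one of the Service's ports, takes that port's name, and then looks for ready addresses carrying the same port name in the Service's Endpoints. Here is a rough re-creation of those checks (my approximation, not the kube-aggregator source; `diagnose` is a made-up helper) that you can feed with the output of `kubectl get service <name> -n <namespace> -o json` and `kubectl get endpoints <name> -n <namespace> -o json`. It skips the access errors and anything that happens after the endpoints check, so "OK" only means these particular checks pass:

```python
from typing import Optional


def diagnose(service: Optional[dict], endpoints: Optional[dict], port: int) -> str:
    """Approximate the Service/port/Endpoints checks behind the reasons above.

    service and endpoints are parsed `kubectl get ... -o json` output
    (None if the object doesn't exist); port is spec.service.port from
    the APIService. Returns a reason name, or "OK" if these checks pass.
    """
    if service is None:
        return "ServiceNotFound"

    # Find the Service port the APIService targets and remember its name.
    port_name = None
    for p in service.get("spec", {}).get("ports", []):
        if p.get("port") == port:
            port_name = p.get("name", "")
            break
    if port_name is None:
        return "ServicePortError"

    if endpoints is None:
        return "EndpointsNotFound"

    # Need at least one subset with ready addresses exposing that port name.
    for subset in endpoints.get("subsets") or []:
        if not subset.get("addresses"):
            continue  # only notReadyAddresses (or nothing) in this subset
        if any(ep.get("name", "") == port_name for ep in subset.get("ports", [])):
            return "OK"
    return "MissingEndpoints"


if __name__ == "__main__":
    svc = {"spec": {"ports": [{"name": "https", "port": 443, "targetPort": 6443}]}}
    # The Endpoints object exists, but no Pod is ready yet.
    eps = {"subsets": [{"notReadyAddresses": [{"ip": "10.0.0.12"}],
                        "ports": [{"name": "https", "port": 6443}]}]}
    print(diagnose(svc, eps, 443))  # MissingEndpoints
```

Matching by port name rather than number is what lets this work when `targetPort` differs from `port`: the Endpoints object lists the container port number, but the name carries over from the Service.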

Conclusion

Hopefully the information above will help you on your troubleshooting journey. If nothing else, it will give you a better understanding of what's going on behind the scenes. Knowing that this error is related to pods and services running in your cluster is already a big step forward.